Spanning the Dataverse with a Unified Data Strategy and Data Persona Analytics – Part 2: An Alternative Approach

In the realm of 21st-century data organization, the business function comes first. The form of the data and the tools that manage it will be created and maintained for the singular purpose of maximizing a business’s ability to leverage its data. At first this seems like an obvious statement, but an examination of how IT has treated data over the past four decades makes it painfully clear that the opposite idea has predominated: the data controls the business.

A company acquires a data management product and then form-fits the data to the limitations and features of that product. The pattern usually begins with the purchase of an RDBMS product at an astronomically high price, followed by the deployment and implementation of that product. Throughout this process, highly skilled and expensively trained “experts” spend thousands of hours dismissing doubts, raised from every area of the business, about the wisdom of their specific choices. Later, they struggle with a variety of technical flaws, commonly called bugs, at every level of the stack. Then they rationalize the incompatibilities between pieces of the stack that were never engineered to work together, and finally they squeeze the existing data into the RDBMS. Eventually, when it all seems to work, they declare this paragon of unstable equilibrium fit for the business to stake its very existence on, and roll it into production.

From this point forward, the business survives or fails on the strength of those efforts. It tries to avoid, or at least minimize, the effects of design flaws that surface continuously and of the catastrophic failures that become inevitable once the system must actually be used. Avoiding these hazards is as futile as trying to stay dry in a rainstorm by dodging the individual raindrops.

This Data-Armageddon scenario is not merely common in 20th-century IT; it is the standard. Yet it is not the most significant calumny of 20th-century IT. That distinction belongs to the restrictive manner in which data is managed. Typically, data access has been limited to the applications that can navigate both the constraints of the RDBMS and the restrictions imposed in response to perceived security risks.

Data access slows as numerous repetitive, duplicative processes compete for processing cycles and often block, or at least restrict, access for more pertinent business demands. Each of these pitfalls stems from limitations intrinsic to the RDBMS. Multitudes of systems use copies of the data so that application silos can operate in total isolation. In these deployments, backups are often taken without limit, and data is duplicated, triplicated, and stored indefinitely. Data with little need for sophisticated integrity mechanisms or security constraints is gathered together and bound to the data with the greatest need for integrity constraints and protection. Taken together, these impositions make it impossible to use the data in a timely and effective fashion. A company’s most valuable asset is often rendered worthless, and the applications that use the data are reduced to near uselessness.

In a utopian world of data management, every manner of data ingestion, access, storage, alteration, and protection is possible, independently and simultaneously. Often, a business needs to process data at near-boundless speed. Multiple application functions must also access the same data concurrently while maintaining a high level of security. The requirements change from moment to moment, and the data must flow freely from application to application and from system to system. In fact, the number of possible combinations of aspirational functional requirements approaches infinity.

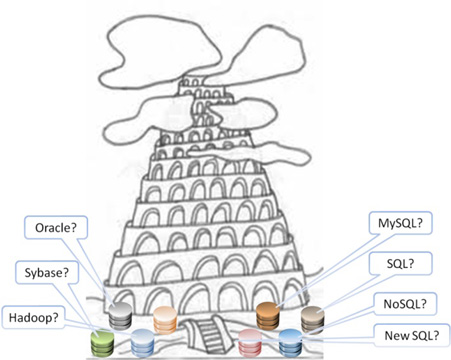

Figure 1

Data scientists understand that before a data management methodology is chosen and before a stack is conceived, the business functions must be clearly and unambiguously understood and defined. Questions such as the following must be answered:

- How do we need to gather data?

- How do we store data long-term vs. short-term?

- How much data needs to be gathered?

- How do we segregate data that needs to be protected from loss, from unauthorized access, or, most importantly, from undetected manipulation?

- How much time do we have to complete a transaction? What is a transaction?

- Which data needs to be analyzed to determine trends, and which needs to be analyzed to support instant, critical decisions?